Global Ingress Gateway

Global Ingress Gateway with Istio, Envoy and Kubernetes

Published on 24.02.2022

This was supposed to be Spot The Difference, a continuation of my previous blog post on running production workloads on spot instances. However, due to adverse market conditions and the ensuing geopolitical tensions, Indian markets have not been doing particularly well, and I decided this is not the right time to deploy a bot that works like Wojak. So Spot The Difference has been postponed until the markets stabilize.

Instead, today's post is about an experiment I've been running over the last few days, and the results are quite interesting.

I've been playing around with Istio, Envoy and the commercial flavors offered by cloud platforms (AWS App Mesh, Google Cloud Traffic Director), and in the process I got an idea: the GLOBAL ISTIO INGRESS GATEWAY.

This idea is inspired by how Cloudflare, Fastly and Akamai work. Basically, this is a lightweight (but powerful) version of their proxy and SSL offloading features, combined with a bit of the custom routing and traffic management offered by Istio.

To establish more context, think of a scenario like this. You have a bunch of datacenters (cloud or otherwise) around the world, and you are running apps on Kubernetes in them. All of the datacenters are connected either by fiber or through an internet exchange.

Some of these apps are exposed to the internet. They need external access, TLS/SSL termination, health checks, and automatic failover to another region or datacenter. How would you do it?

Istio (and by extension, Envoy) could be a good contender for this problem. Let me explain.

Before we get started, let's broadly go over a few Istio concepts.

-

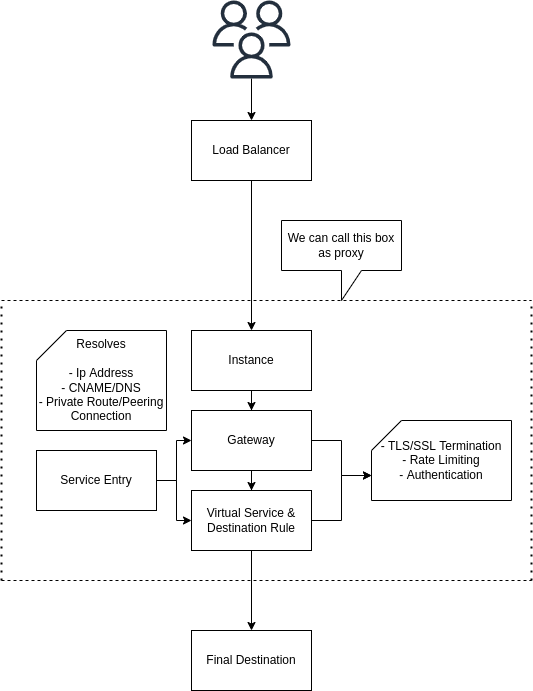

Virtual Gateway, Virtual Service and Destination Rules are used to direct traffic from the source to destination, enabling us to modify headers, body, paramters, etc. For the sake of the blog post, I will refer to this as proxy.

-

Service Entry is used to store the destination endpoint. It acts like a DNS of sorts and resolves a domain(host) to an ip, a cname record or a service in the kubernetes cluster.

Combined with Envoy's ability to load configuration and resources dynamically, these give us a pretty powerful proxy for our applications. The following diagram illustrates it better.

Dark screen warning: Bright colors ahead. Watch out.

A very basic example of using Istio for this use case looks something like this.

Here, we are proxying the domain myapp.backendengineer.net to an application hosted in a VPC (10.128.0.5) that is peered with the proxy. The DNS record for myapp.backendengineer.net points to proxy.backend.engineer, and the traffic route is managed by Istio.
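Below is a rough sketch of the three resources for this example, generated from Python so the structure is easy to inspect. It makes several assumptions that are not from the original setup: the default `istio: ingressgateway` selector, a hypothetical TLS secret named `myapp-tls`, an app listening on plain HTTP port 80, and illustrative resource names. `kubectl apply -f` accepts the printed JSON `List` in the same way it accepts YAML.

```python
import json

HOST = "myapp.backendengineer.net"
BACKEND_IP = "10.128.0.5"   # app in the peered VPC, as in the example above
TLS_SECRET = "myapp-tls"    # hypothetical secret; must live in the ingress gateway's namespace


def gateway(host: str, secret: str) -> dict:
    """Accept HTTPS for `host` on the ingress gateway pods and terminate TLS there."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "Gateway",
        "metadata": {"name": "myapp-gateway"},
        "spec": {
            "selector": {"istio": "ingressgateway"},  # default ingress gateway label
            "servers": [{
                "port": {"number": 443, "name": "https", "protocol": "HTTPS"},
                "tls": {"mode": "SIMPLE", "credentialName": secret},
                "hosts": [host],
            }],
        },
    }


def virtual_service(host: str) -> dict:
    """Route decrypted HTTP traffic for `host` to the ServiceEntry host on port 80."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": "myapp"},
        "spec": {
            "hosts": [host],
            "gateways": ["myapp-gateway"],  # assumes the same namespace as the Gateway
            "http": [{
                "route": [{"destination": {"host": host, "port": {"number": 80}}}],
            }],
        },
    }


def service_entry(host: str, ip: str) -> dict:
    """Tell the mesh that `host` lives outside the cluster at a fixed IP."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "ServiceEntry",
        "metadata": {"name": "myapp-backend"},
        "spec": {
            "hosts": [host],
            "location": "MESH_EXTERNAL",
            "ports": [{"number": 80, "name": "http", "protocol": "HTTP"}],
            "resolution": "STATIC",  # use the endpoint IP as-is, no DNS lookup
            "endpoints": [{"address": ip}],
        },
    }


manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [gateway(HOST, TLS_SECRET), virtual_service(HOST), service_entry(HOST, BACKEND_IP)],
}

if __name__ == "__main__":
    print(json.dumps(manifest, indent=2))  # e.g. python gen.py | kubectl apply -f -
```

Pointing the ServiceEntry at a public IP or a peering address only changes `BACKEND_IP`. The Gateway and VirtualService stay the same.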

Since a ServiceEntry can resolve any DNS record, the target could be a server's public IP, a peering connection or any other endpoint. This property lets us use Istio as a global proxy. Once we add its region-based failover, rate limiting and routing, we are somewhat entering Cloudflare and Fastly territory. Last time I checked, it's free real estate. So, let's go ahead.
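The region-based failover part can be sketched in the same style. This version uses two hypothetical backends in different regions, each tagged with an Istio locality, behind one ServiceEntry. A DestinationRule then enables locality failover. Istio only fails over across localities when outlier detection is configured, which is why `outlierDetection` sits next to `localityLbSetting`. Region names, IPs and thresholds are placeholders. The gateway's own locality comes from the node labels of the cluster it runs in.

```python
import json

HOST = "myapp.backendengineer.net"

# Hypothetical backends, one per region, each tagged with an Istio locality
# string ("region/zone"). Region names and IPs are illustrative only.
BACKENDS = [
    {"address": "10.128.0.5", "locality": "asia-south1/asia-south1-a"},
    {"address": "10.132.0.5", "locality": "europe-west1/europe-west1-b"},
]

service_entry = {
    "apiVersion": "networking.istio.io/v1beta1",
    "kind": "ServiceEntry",
    "metadata": {"name": "myapp-backend"},
    "spec": {
        "hosts": [HOST],
        "location": "MESH_EXTERNAL",
        "ports": [{"number": 80, "name": "http", "protocol": "HTTP"}],
        "resolution": "STATIC",
        "endpoints": BACKENDS,
    },
}

destination_rule = {
    "apiVersion": "networking.istio.io/v1beta1",
    "kind": "DestinationRule",
    "metadata": {"name": "myapp-failover"},
    "spec": {
        "host": HOST,
        "trafficPolicy": {
            # Eject an endpoint after 5 consecutive 5xx responses; locality
            # failover depends on this being configured.
            "outlierDetection": {
                "consecutive5xxErrors": 5,
                "interval": "10s",
                "baseEjectionTime": "30s",
            },
            "loadBalancer": {
                "localityLbSetting": {
                    "enabled": True,
                    # Gateways in asia-south1 spill over to europe-west1 and vice versa.
                    "failover": [
                        {"from": "asia-south1", "to": "europe-west1"},
                        {"from": "europe-west1", "to": "asia-south1"},
                    ],
                },
            },
        },
    },
}

if __name__ == "__main__":
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": [service_entry, destination_rule]}, indent=2))
```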

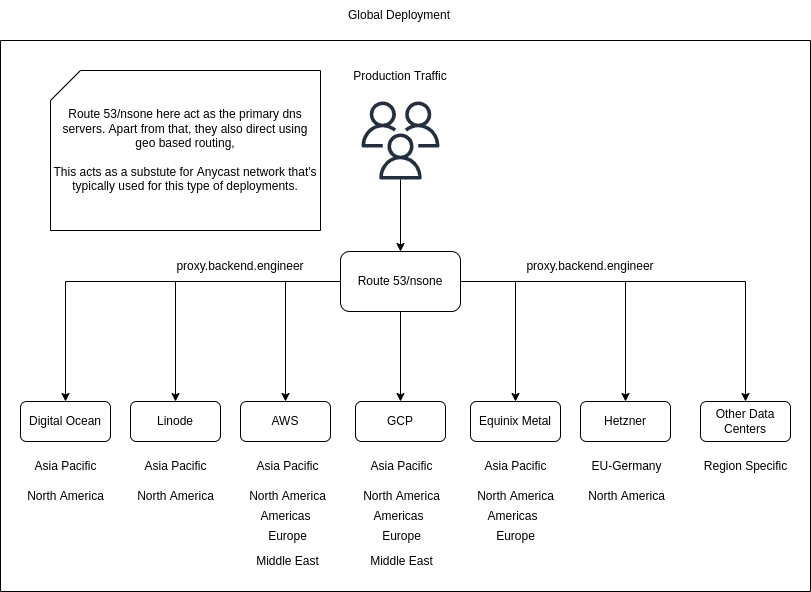

I ended up with a multi-cloud deployment like this.

Typically, deployments like this use an anycast IP, with traffic passing through a private (fast) backbone using BGP routing.

However, I don't own an ASN or an IP range, so we will cheat a little and go with geo-DNS resolution. The catch is that traffic will travel over the public internet, so performance will be orders of magnitude slower than with CDN providers. There are ways to address this, but for now I will pretend the problem does not exist.

To work around this, I used DNS for geolocation-based routing and as a second level of health checks at the region level.
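The DNS side can be modelled in a few lines. This is a toy sketch, not a real DNS server. The regions, the documentation-range IPs and the country-to-region preference table are all hypothetical. Each country gets an ordered list of regions, and the resolver hands out the first region whose region-level health check passes. Real resolvers cache answers for the record's TTL, so a failover only reaches clients after that TTL expires.

```python
from dataclasses import dataclass


@dataclass
class RegionalGateway:
    region: str
    ip: str
    healthy: bool = True  # latest result of the DNS provider's health probe


# Hypothetical regional ingress gateways (documentation IP ranges, not real hosts).
GATEWAYS = {
    "asia": RegionalGateway("asia", "203.0.113.10"),
    "europe": RegionalGateway("europe", "198.51.100.10"),
    "us": RegionalGateway("us", "192.0.2.10"),
}

# Hypothetical preference order per client country: nearest region first.
PREFERENCE = {
    "IN": ["asia", "europe", "us"],
    "DE": ["europe", "us", "asia"],
    "US": ["us", "europe", "asia"],
}
DEFAULT_ORDER = ["us", "europe", "asia"]


def resolve(country: str) -> str:
    """Return the A record a geo-DNS service would hand out for `country`."""
    for region in PREFERENCE.get(country, DEFAULT_ORDER):
        gw = GATEWAYS[region]
        if gw.healthy:
            return gw.ip
    raise RuntimeError("no healthy region")


def mark(region: str, healthy: bool) -> None:
    """Record the outcome of a region-level health check."""
    GATEWAYS[region].healthy = healthy
```

For example, `resolve("IN")` returns the asia gateway's IP. After `mark("asia", False)`, the same call returns the europe gateway's IP instead.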

On a side note, if you own an ASN and an IP range and are willing to experiment with them, please do reach out. I'd be more than happy to collaborate on this project.

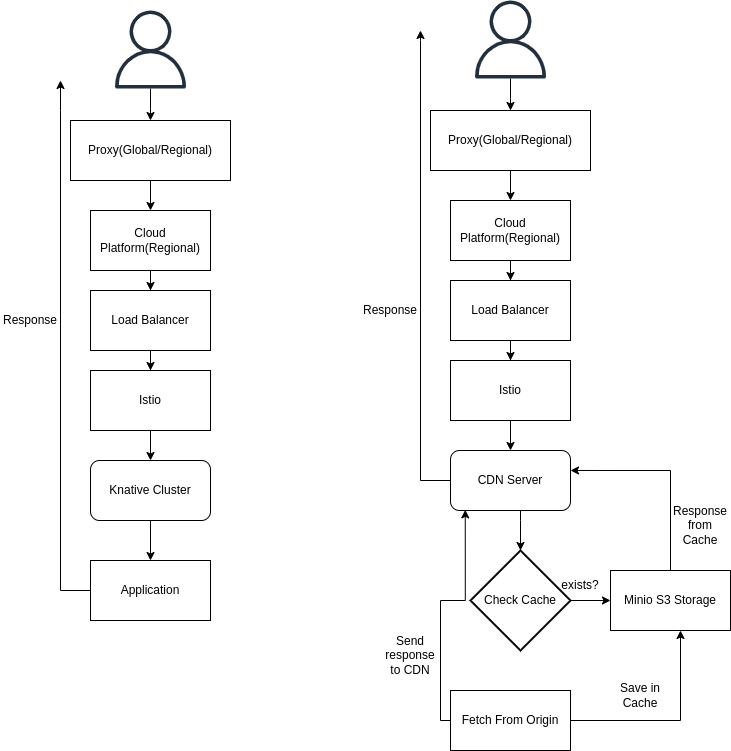
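The rate limiting mentioned earlier maps onto Envoy's local rate limit filter, which Istio typically configures through an EnvoyFilter. That filter is a token bucket configured with `max_tokens`, `tokens_per_fill` and `fill_interval`. The class below is a simplified Python model of those semantics, not Envoy's code. Time is passed in explicitly to keep it deterministic. A rejected request corresponds to the HTTP 429 the filter returns by default.

```python
class TokenBucket:
    """Simplified model of a token bucket with Envoy-style knobs."""

    def __init__(self, max_tokens: int, tokens_per_fill: int,
                 fill_interval: float, now: float = 0.0) -> None:
        self.max_tokens = max_tokens
        self.tokens_per_fill = tokens_per_fill
        self.fill_interval = fill_interval  # seconds
        self.tokens = max_tokens            # bucket starts full
        self.last_fill = now

    def _refill(self, now: float) -> None:
        # Add tokens_per_fill for each whole fill_interval elapsed, capped at max_tokens.
        fills = int((now - self.last_fill) // self.fill_interval)
        if fills > 0:
            self.tokens = min(self.max_tokens, self.tokens + fills * self.tokens_per_fill)
            self.last_fill += fills * self.fill_interval

    def allow(self, now: float) -> bool:
        """True if a request at time `now` is admitted, False if it would get a 429."""
        self._refill(now)
        if self.tokens > 0:
            self.tokens -= 1
            return True
        return False
```

For example, `TokenBucket(100, 100, 1.0)` admits bursts of up to 100 requests and refills 100 tokens every second.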

Now that we have a global proxy with TLS termination, rate limiting and failover, let's try to extend it to more developer applications. For starters, I can think of the following:

- Using Knative or OpenFaaS to run serverless workloads.

- Using MinIO or any other object storage system as a cache.

The architecture of those services would look something like this.

The possibilities for more applications are endless, because you have an entire Kubernetes cluster at your disposal.

I am not covering the security aspects of the application right now. That will come in another blog post where I talk about WAF, mTLS, Zero Trust and certificate management. Generally speaking, though, anyone with the money and resources to deploy a global Kubernetes cluster would also have enough money for a hardware appliance (F5, Fortinet, etc.) or a cloud provider's solution. This is an area best left to the experts.

Closing Notes

I typically write long blog posts, but today I will close early and leave you with these thoughts.

So, after all of this, is it worth going through the effort?

People who've been using Cloudflare, Akamai or Fastly will continue to use them. The same applies to companies that use Cloudflare's SSL for SaaS and other offerings. For them, the TCO (total cost of ownership) of something like this is too high.

However, (big) consumer companies that have started moving to public and hybrid cloud could see this as a viable option. The era of 5G and edge computing will only accelerate this, with telecom providers offering these services.

Personally, I wouldn't want to do it again unless it's needed. It's just way too much effort to set up and manage.

What do you think?