Rise of a market participant

Click here to skip to the system architecture. Otherwise, enjoy the evolution story.

Capital markets have been a part of my life for a very long time. That's probably because part of my extended family and many of my friends are investment bankers. But I found traditional trading and investment banking operations rather boring, so much so that I quit my career as a trader and took up software engineering.

Don't get me wrong, I don't hate it. I love the concept of equity and derivatives markets. It's just that back in the day, when I thought software engineering was rocket science and all I knew was that selling options over the counter was the way to live life, that thought sounded very boring. However, I had a reality check early on that building software was actually easier (or so I thought).

I took a course in C++, IBM Mainframe and IBM DB2. I knew how to write software, but I didn't know what to do with that knowledge. That's when I got the idea for this project: build a simple market participant to act as a market maker for my trades.

That started the journey of a C++ program's rise from a simple order book analysis engine to a market participant on the Indian stock exchanges. This is also my first personal project that was completed and deployed. Of the 50 or so personal projects that I started, only 3 ended up completed and in production. For that reason, this one has a special place in my heart.

In this blog post, we will talk about the architecture of the application and the infrastructure that powers it, and how it evolved from a mainframe to a (somewhat) serverless system that runs in AWS, and eventually to a hybrid/multi cloud deployment across 2 cloud platforms and an on-premises datacenter.

A quick disclaimer before going forward. This blog post does not talk about the trading strategies or techniques employed by the program. Generally, I do not talk about stocks, scrips, trading strategies or trading algorithms in a public or a private forum, except on certain occasions that I see fit, for reasons of compliance and otherwise.

This blog post is not meant to encourage anyone to trade in the capital markets. In fact, I strongly recommend that you do not enter the markets unless you know what you are getting yourself into. If the odds are against you, then you would be playing a mental squid game with yourself, likely throughout the rest of your life.

Another mandatory disclaimer. I am not a SEBI or any other registered investment advisor or professional. In a way, I am as qualified as a degenerate from r/wsb.

The reason I am writing this post is that I think a similar architecture can be used for a majority of web and mobile application backends with a few tweaks in either the services or the flow or both. The trading system is just an example of how I used the different services. Without further delay, let's start.

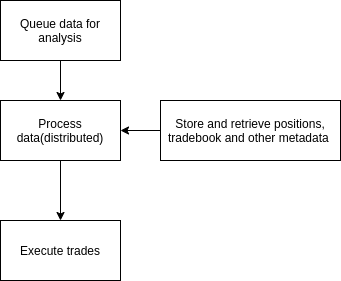

Broadly, the system has 4 components.

- Acquire data on scrips

- Process the data (ideally in a distributed fashion) and make a decision

- Store this decision for future reference

- Execute the decision
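The four components above can be sketched as a single pass through four pluggable stages. This is only an illustration and not the production code: the `Decision` type, the action names and the rule that a "hold" decision is stored but not executed are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    scrip: str
    action: str  # hypothetical values: "open", "close", "hold"


def run_pipeline(
    acquire: Callable[[], dict],
    decide: Callable[[dict], Decision],
    store: Callable[[Decision], None],
    execute: Callable[[Decision], None],
) -> Decision:
    """Run one pass through the four stages in order."""
    data = acquire()          # 1. acquire data on a scrip
    decision = decide(data)   # 2. process the data and make a decision
    store(decision)           # 3. store the decision for future reference
    if decision.action != "hold":
        execute(decision)     # 4. execute the decision (assumed: skip on "hold")
    return decision
```

Keeping each stage behind a plain callable is what makes it possible to swap the deployment underneath (mainframe, containers, managed services) without touching the flow itself.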

That's pretty much all you need. The diagram above is basically how I ran the application on the IBM mainframe. It was probably the worst system when it comes to usability but the best system when it comes to speed and reliability. Eventually I lost access to the mainframe for reasons of clearance and otherwise. I wanted to get one for myself, but the price tag for capex and opex made me change my mind rather quickly.

It was around this time that I was introduced to AWS, containers, JavaScript and Golang. While the foundation remained the same, the deployment paradigm changed. After multiple iterations of debugging and testing, the system went live in mid 2019, right before my graduation exams.

In due course, all it needed was a few tweaks and changes. Other than that, it has been going strong to date.

The Cloud

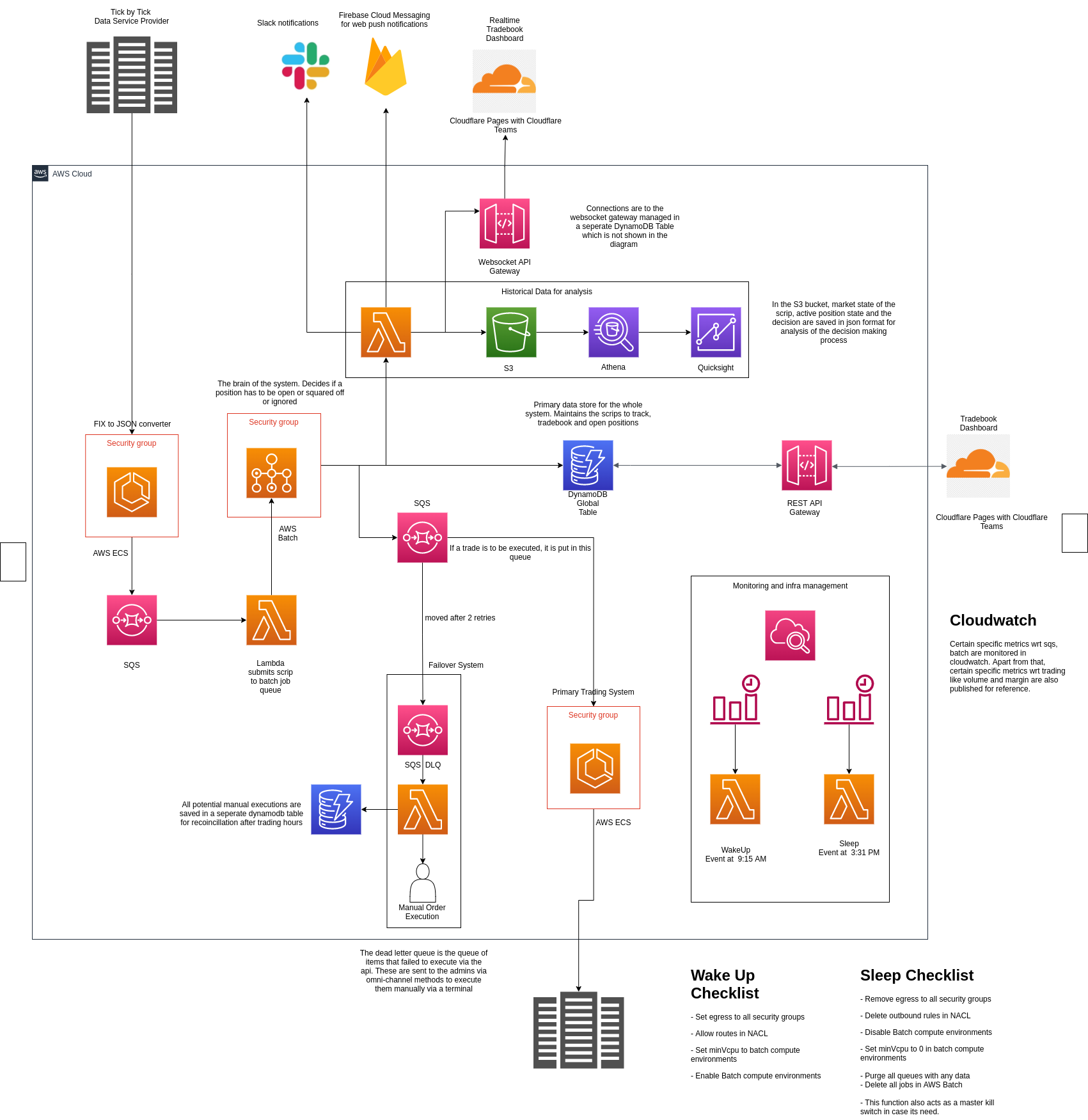

A high level architecture of the trading system is below. Certain trivial services are removed for the sake of brevity.

Unlike high frequency trading and other volume based trading algorithms, this system is designed to trade into volatility. The algorithm is designed to carry positions forward until expiry or until they are squared off at a nominal price. It is because of this nature that the system mimics a covered call.

The algorithm trades the Nifty50 and BankNifty indices and their constituents on NSE, India. I chose these because the indices and their constituents are all traded in futures and options, which gives us better spreads and liquidity for hedging.

The infrastructure looks like this

The following are certain important components of the system

FIX to JSON Converter: The workflow starts here. It ingests a FIX message from a data provider, converts it to a custom JSON format and saves it in an SQS queue. We use 3 data providers for redundancy, of which one provides messages in FIX while the others provide messages in JSON. Messages are ingested in a pre-defined order of priority.
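To give a feel for the conversion step, here is a minimal sketch of turning a flat FIX tag=value message into JSON. The tag numbers used (35, 55, 269, 270, 271) are standard FIX tags, but the JSON key names and the choice of fields are assumptions for illustration; the custom format used by the real converter is not shown in this post. The sketch also ignores repeating groups, which real market data messages rely on.

```python
import json

SOH = "\x01"  # standard FIX field delimiter

# Small subset of standard FIX tag numbers; the JSON key names are hypothetical.
TAG_NAMES = {
    "35": "msgType",
    "55": "symbol",
    "269": "entryType",
    "270": "price",
    "271": "size",
}
NUMERIC_FIELDS = {"price", "size"}


def fix_to_json(raw: str) -> str:
    """Convert one flat FIX tag=value message into a JSON string."""
    out = {}
    for field in raw.strip(SOH).split(SOH):
        tag, _, value = field.partition("=")
        name = TAG_NAMES.get(tag)
        if name is None:
            continue  # ignore tags outside the illustrative subset
        out[name] = float(value) if name in NUMERIC_FIELDS else value
    return json.dumps(out, sort_keys=True)
```

The resulting string is what would be placed on the queue as the message body, so that everything downstream only ever has to understand one format regardless of which provider the data came from.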

Lambda processor to AWS Batch: The message from SQS is ingested by a Lambda function. The values in the message are set as environment variables on a job, which is then submitted to an AWS Batch job queue.
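A rough sketch of what such a Lambda function could look like with boto3 follows. The queue name `brain-queue`, the job definition name `brain-job` and the message fields are placeholders I made up for the example. The `batch_client` parameter is only there so the handler can be exercised without AWS credentials.

```python
import json


def build_submit_args(message: dict, job_queue: str, job_definition: str) -> dict:
    """Turn one decoded SQS message into keyword arguments for Batch submit_job."""
    environment = [
        {"name": str(key).upper(), "value": str(value)}
        for key, value in sorted(message.items())
    ]
    return {
        "jobName": f"brain-{message.get('symbol', 'unknown')}",
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        "containerOverrides": {"environment": environment},
    }


def handler(event, context, batch_client=None):
    """Lambda entry point for an SQS trigger; submits one Batch job per record."""
    if batch_client is None:
        import boto3  # imported lazily so the helper above is testable offline

        batch_client = boto3.client("batch")
    job_ids = []
    for record in event["Records"]:
        message = json.loads(record["body"])
        # "brain-queue" and "brain-job" are hypothetical resource names.
        args = build_submit_args(message, "brain-queue", "brain-job")
        job_ids.append(batch_client.submit_job(**args)["jobId"])
    return job_ids
```

One thing to watch for in a real deployment: Batch job names only allow letters, numbers, hyphens and underscores, so a symbol containing other characters would need to be sanitised before being used in `jobName`.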

AWS Batch Job: Codenamed brain, this system is responsible for understanding the order book for the scrip and deciding whether to open, close or maintain a position. It also acts as the primary risk management system for the open positions. One reason for using AWS Batch is that it lets you play around with compute environments, be it Spot, On-Demand or Fargate. The other reason is that once a job is submitted, if a decision is not taken within 10 minutes, the job has to be terminated, and if an error occurs, the job has to be automatically re-queued. All of this is managed natively by AWS Batch with no extra effort or programming.
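The timeout and re-queue behaviour maps onto two fields of a Batch job definition. Below is a sketch of the arguments one might pass to boto3's `register_job_definition`. The 600 second timeout comes from the 10 minute limit above; the job definition name, the resource sizes and the retry count of 3 are assumptions for the example.

```python
def brain_job_definition(image: str) -> dict:
    """Keyword arguments for the Batch register_job_definition call."""
    return {
        "jobDefinitionName": "brain-job",  # hypothetical name
        "type": "container",
        "containerProperties": {
            "image": image,
            "resourceRequirements": [  # assumed sizes, tune to the workload
                {"type": "VCPU", "value": "1"},
                {"type": "MEMORY", "value": "2048"},
            ],
        },
        # Terminate any attempt that has not finished within 10 minutes.
        "timeout": {"attemptDurationSeconds": 600},
        # Re-run a failed attempt automatically (assumed: up to 3 attempts in total).
        "retryStrategy": {"attempts": 3},
    }
```

With a client in hand, this would be applied as `boto3.client("batch").register_job_definition(**brain_job_definition(image_uri))`, where `image_uri` is whatever container image holds the brain.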

Trade Execution System: This runs on ECS. Once the brain takes a decision, it is sent via an SQS queue to the trading system, which is connected to a broker. The request is tried 2 times, after which it is sent to a dead letter queue. Items in the dead letter queue are sent to the admin via omni channel methods (call, SMS, push notification, Slack, email) and the admin is required to execute the order manually via the broker's terminal.
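The "try 2 times, then dead letter" behaviour is something SQS can do on its own through a redrive policy. Here is a small sketch of the queue attributes involved; the ARN shown in the usage note is a placeholder and the function name is my own.

```python
import json


def orders_queue_attributes(dead_letter_queue_arn: str, max_receives: int = 2) -> dict:
    """Attributes for the SQS orders queue, including its dead letter redrive policy."""
    redrive_policy = {
        "deadLetterTargetArn": dead_letter_queue_arn,
        # After this many receives without a delete, SQS moves the message to the DLQ.
        "maxReceiveCount": str(max_receives),
    }
    # SQS expects the redrive policy as a JSON string, not a nested object.
    return {"RedrivePolicy": json.dumps(redrive_policy)}
```

These attributes would be passed as `Attributes=` to `create_queue` or `set_queue_attributes` on a boto3 SQS client, for example with a dead letter queue ARN such as `arn:aws:sqs:ap-south-1:123456789012:orders-dlq` (a made-up account and queue name).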

The execution system was initially codenamed terminal, but after the crypto meme boom of 2020-2021, I decided to name it sminem. Sminem is regarded as the big bull of the crypto memeverse, whose goal is to take the markets up. Considering the current bull market scenario, it's only fitting to name the execution system after the legend.

DynamoDB: The watchlist and trade book are maintained in a DynamoDB global table. This is replicated across 2 regions, Mumbai and Singapore. It is a mission critical part of the infrastructure, as other systems rely on it as the source of truth for the current state of positions and the trade book. The data with the broker is synced with the database via a reconciliation job after market hours.

CloudWatch and CloudWatch Events: The events control the sleep and wakeup cycles of the system. They perform the tasks in the checklist and exit gracefully. The sleep script is also used as an emergency kill switch (circuit breaker) to suspend automated trading. The CloudWatch dashboard shows certain key metrics like queue size, errors and other metrics from AWS Batch.

The circuit breaker was used only once during the crash of 2020. The system went offline for 3 weeks and came online once the market cooled off. I have to admit, disabling the network is the fastest and the most satisfying way to kill an application.

Web Dashboards: They show the latest trade book and live positions to the admin. All data is served via AWS API Gateway, both realtime (using WebSockets) and static (using API Gateway as a proxy for DynamoDB). Hosting, authentication and authorization are handled by Cloudflare using Cloudflare Pages and Cloudflare Access.

In my opinion, zero trust is a very interesting paradigm that one can incorporate into many admin facing workloads and, in some cases, into customer facing applications as well using mTLS. If I am being honest, for this application, no one (including me) uses these dashboards. They serve no practical purpose except probably for signage.

Application Analytics: This is a very important component of the application. All decision data is logged in S3. Each log entry has 4 JSON objects, namely scrip, marketState, currentPositionInfo and decisionInfo. These are queried by the developers and IBs to understand the decision making process of the brain. If it's not what is expected, then the weights are adjusted in the watchlist for trading the next day.
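As an illustration of what one such log entry might look like, here is a sketch that assembles the four objects into a single JSON document and derives an S3 key for it. The four top-level names are the ones listed above. Everything inside them and the date-partitioned key layout are assumptions made for the example.

```python
import json
from datetime import datetime, timezone


def build_decision_record(scrip, market_state, position_info, decision_info) -> str:
    """Serialise one decision into the four top-level objects named above."""
    record = {
        "scrip": scrip,
        "marketState": market_state,
        "currentPositionInfo": position_info,
        "decisionInfo": decision_info,
    }
    return json.dumps(record, sort_keys=True)


def decision_log_key(symbol: str, decided_at: datetime) -> str:
    """Hypothetical S3 key layout, partitioned by UTC date so one day is easy to query."""
    ts = decided_at.astimezone(timezone.utc)
    return f"decisions/{ts:%Y/%m/%d}/{symbol}/{ts:%H%M%S}.json"
```

Partitioning the keys by date keeps a single trading day's decisions under one prefix, which suits the "review today, adjust weights for tomorrow" loop described above.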

The decision is relayed to admins via Push Notification, Slack and also to the realtime dashboard. Unless the market is extremely volatile, this is not really monitored. If an incorrect decision is observed, the circuit breaker is activated as a precautionary measure.

Apart from the ones mentioned in the diagram, there are a few post trade reconciliation jobs that run to update the database.

High Availability

The system runs in 2 AWS regions, Mumbai and Singapore. The primary region is Mumbai and the failover region is Singapore. Route 53 health checks are deployed in Singapore to monitor Mumbai's health, and when Mumbai is down, an SNS notification is triggered to wake up the infrastructure in Singapore.

As an operating procedure, even though DynamoDB is replicated to Singapore, its contents are first verified against the broker's system. Any required updates to the trade book and open positions are made, and only then is trading resumed from this region.
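The verification step boils down to comparing two views of the open positions and treating the broker as authoritative. A minimal sketch, assuming positions can be reduced to a net quantity per scrip (the real trade book will certainly carry more than that):

```python
from typing import Dict


def reconcile_positions(db: Dict[str, int], broker: Dict[str, int]) -> Dict[str, int]:
    """Return the corrections needed to make the database match the broker.

    Keys are scrip symbols and values are net quantities. The broker is
    treated as authoritative; a value of 0 means the position should be
    closed out in the database.
    """
    corrections = {}
    for symbol in sorted(set(db) | set(broker)):
        broker_qty = broker.get(symbol, 0)
        if db.get(symbol, 0) != broker_qty:
            corrections[symbol] = broker_qty
    return corrections
```

An empty result means the replicated table already agrees with the broker and trading can resume as is. Anything else gets written back to the table first.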

Every quarter, one day of trading is conducted in Singapore to test the system's operational performance and live switchover capabilities.

What Next?

In early 2021, we decided to move to a multi cloud architecture for the brain (the decision making algorithm). The reason is that the brain acts as the primary risk management system for all active positions, and going multi/hybrid cloud gives us more options for business continuity in case of a region outage.

What followed was a fact finding mission of building a Kubernetes centered deployment across AWS, GCP and on-premises. We ended up building a 40U HPE rack and colocating it in a datacenter in Mumbai just to test the on-premises theory, and much to our surprise it worked.

However, we later moved to DigitalOcean as an alternative to on-premises, as running ops for the rack was not possible with just 3 people. Hands off and autonomous is the name of the game, and cloud was the only way of making that happen.

I will write about that in a couple of blog posts over the next few weeks and if possible make some explanatory videos.

If you have any ideas or suggestions that you want me to try, feel free to hit me up via LinkedIn or the form on the home page, or drop me an email at hello@backend.engineer. I will be more than happy to try them out.